Fluentd with Kafka (1/2) Use cases

Use cases : Why is a “data streaming platform” crucial?

In the enterprise, processing streaming data in real time is sometimes critical. A good example is “SecOps”. As a SecOps operator, you need to capture events that may lead to security incidents so that you can act as soon as an event is triggered, for example by changing the security configuration to block suspicious access. An “event-driven” system addresses this by receiving the data in real time and responding quickly to events as they happen.

Another use case is “Event sourcing”. If you run a website that uses external content from a third-party provider, you need to detect updates to that content promptly so that your website stays up to date. An “event-driven” system helps you track state changes at the third-party content provider and feed messages to websites and/or applications for further action.

Approach : What do we need? “Fluentd + Kafka”

For applications that are distributed across multiple clouds and/or deployed as microservices, it is critical to build a data streaming platform that moves data from sources to destinations in real time. The platform must decouple sources from destinations so that routing can be managed flexibly and dynamically. That’s where “Fluentd + Kafka” comes in. The combination of Fluentd and Kafka allows you to manage data streaming policies independently, which brings your operational scalability to the next level.

In this “Fluentd with Kafka” blog series, I walk through use cases and approaches for data streaming platforms built with Fluentd and Kafka, and introduce the steps to configure Fluentd and Kafka for enterprise use.

(1) Use cases and Approach (this post)

(2) Steps to configure Fluentd and Kafka

What is Apache Kafka?

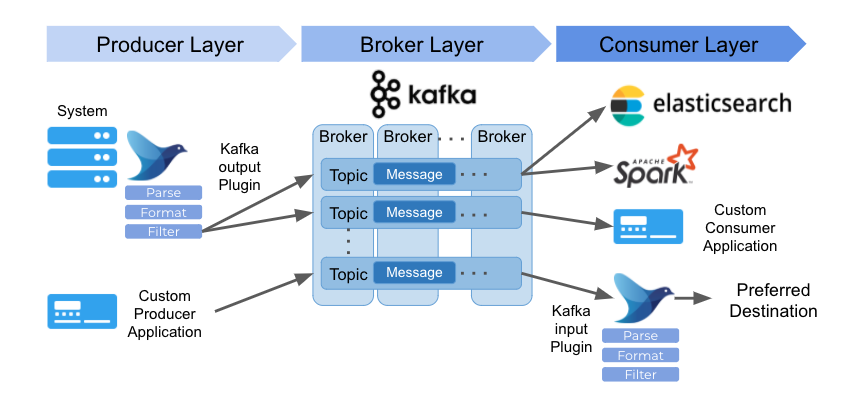

Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications. Kafka mainly consists of three components: Broker, Producer, and Consumer.

Broker : the storage layer, which manages messages with high availability and scalability.

Producer : client applications that publish (push) messages to the Broker.

Consumer : client applications that subscribe to (pull) messages from the Broker.

In a Kafka cluster, you can create different “topics” depending on the use case, and the messages in each topic are managed with partitions and replicas. Client applications publish and subscribe to messages in topics asynchronously, which makes it easier to distribute messages from one application to others without direct dependencies. In addition, Kafka provides client SDKs in various languages, so you can transport messages across applications running in a distributed environment.
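To make the topic and partition idea more concrete, here is a minimal in-memory sketch in Python. It is not Kafka client code: the `Topic` and `Consumer` classes, the topic name, and the CRC32-based partition choice are all assumptions made for illustration (a real Kafka client uses its own partitioner, and Brokers persist and replicate the data). It only shows how keyed messages land in partitions and how two consumers can read the same topic independently by tracking their own offsets.

```python
import zlib
from collections import defaultdict


class Topic:
    """Toy stand-in for a Kafka topic: a fixed set of append-only partitions."""

    def __init__(self, name, num_partitions=3):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def publish(self, key, value):
        # Messages with the same key always go to the same partition,
        # so their relative order is preserved. CRC32 is an assumption
        # chosen for this sketch, not Kafka's actual partitioner.
        index = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[index].append((key, value))
        return index


class Consumer:
    """Pulls messages at its own pace by tracking one offset per partition."""

    def __init__(self, topic):
        self.topic = topic
        self.offsets = defaultdict(int)

    def poll(self):
        records = []
        for index, log in enumerate(self.topic.partitions):
            start = self.offsets[index]
            records.extend(log[start:])
            self.offsets[index] = len(log)  # remember how far we have read
        return records


topic = Topic("security-events")  # hypothetical topic name
topic.publish("host-a", "login failed")
topic.publish("host-b", "port scan detected")
topic.publish("host-a", "login failed again")

alerting = Consumer(topic)
archiver = Consumer(topic)

first_batch = alerting.poll()    # all three messages
second_batch = alerting.poll()   # nothing new since the last poll
archive_batch = archiver.poll()  # an independent consumer still sees everything
```

Because each consumer keeps its own offsets, the producer never needs to know who reads the messages or when, which is the decoupling described above.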

You can find more information about Kafka in the Kafka Documentation, and typical use cases in Kafka : Use Cases.

How Fluentd works with Kafka

Fluentd provides “fluent-plugin-kafka” for both input and output use cases. The input plugin works as a Kafka Consumer and subscribes to messages from topics in Kafka Brokers. The output plugin, on the other hand, acts as a Kafka Producer and publishes messages to topics.

One use case is online event notification from system logs, where low latency is required. In this use case, Fluentd input plugins collect log data, for example from rsyslog. Fluentd then filters the messages required for event notification and routes them to a Kafka Broker via the output plugin of “fluent-plugin-kafka”. Once messages are stored in the Kafka Broker, any client application can subscribe to them and handle them as required.
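The collect, filter, and route flow can be sketched in a few lines of Python. This is an illustration of the logic only, not Fluentd or “fluent-plugin-kafka” code: the `parse` and `route` functions, the sample lines, the severity threshold, and the topic name `event-notifications` are assumptions. It relies on the standard syslog convention that the `<PRI>` value encodes facility × 8 + severity, where a lower severity number means a more urgent message.

```python
import re

# Assumed input shape: "<PRI>rest of the syslog line"
SYSLOG_PATTERN = re.compile(r"^<(?P<pri>\d+)>(?P<rest>.*)$")


def parse(line):
    """Turn a raw syslog line into a record, or return None if it does not match."""
    match = SYSLOG_PATTERN.match(line)
    if match is None:
        return None
    pri = int(match.group("pri"))
    return {
        "facility": pri // 8,
        "severity": pri % 8,
        "message": match.group("rest"),
    }


def route(lines, max_severity=3, topic="event-notifications"):
    """Keep only urgent records (severity <= max_severity) and tag them with a topic."""
    outgoing = []
    for line in lines:
        record = parse(line)
        if record is not None and record["severity"] <= max_severity:
            outgoing.append((topic, record))
    return outgoing


sample = [
    "<34>Oct 11 22:14:15 host-a su: 'su root' failed for user on /dev/pts/8",
    "<30>Oct 11 22:14:16 host-a cron: job finished",
    "not a syslog line",
]
routed = route(sample)  # only the first line is urgent enough to be routed
```

In a real deployment, the parsing and filtering would be done by Fluentd input and filter plugins, and the last step would hand the records to the Kafka output plugin instead of building a list.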

Considerations when using Fluentd with Kafka

Regardless of the use case, you need to ensure security when you manage streaming data in the enterprise. For data transport, you need to make sure that all data is transferred securely to protect against interception by third parties, and that communication takes place only between trusted components. You also need to ensure the availability of messages.

Here are the key features of Fluentd and Kafka for the enterprise :

Secure data transportation between all components

Kafka Broker provides encryption with SSL/TLS.

The Kafka plugin for Fluentd can use custom SSL keys to communicate with Kafka.

Ensure the authenticity of both input and output data sources

Verify the identity of data sources and whether they have access permission.

Kafka Broker supports SASL authentication mechanisms.

The Kafka plugin for Fluentd can access Kafka Broker with SASL authentication.

Ensure all messages are transferred without any loss

Kafka replicates the partitions of each topic across Brokers in the cluster.

Fluentd has buffer plugins, which improve the availability and efficiency of message delivery. With a buffer plugin, you can persist messages and control retries and flushing to prevent data loss when a system failure occurs.
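The buffering, flushing, and retry behavior described in this list can be sketched as follows. This is a simplified Python model, not Fluentd’s buffer implementation: the `BufferedOutput` class, its parameters, and the doubling wait between retries are assumptions for illustration, and the chunk is held in memory here, whereas a persistent buffer would write it to disk.

```python
import time


class BufferedOutput:
    """Collects records into a chunk and retries delivery with growing waits."""

    def __init__(self, send, chunk_limit=2, max_retries=3, base_wait=1.0,
                 sleep=time.sleep):
        self.send = send                # callable that delivers a list of records
        self.chunk_limit = chunk_limit  # flush once this many records are buffered
        self.max_retries = max_retries
        self.base_wait = base_wait
        self.sleep = sleep              # injectable so the example does not really wait
        self.chunk = []

    def emit(self, record):
        self.chunk.append(record)
        if len(self.chunk) >= self.chunk_limit:
            self.flush()

    def flush(self):
        if not self.chunk:
            return True
        for attempt in range(self.max_retries + 1):
            try:
                self.send(list(self.chunk))
            except ConnectionError:
                if attempt < self.max_retries:
                    # Wait 1x, 2x, 4x ... the base interval before retrying.
                    self.sleep(self.base_wait * 2 ** attempt)
                continue
            self.chunk.clear()  # delivered, so the chunk can be dropped
            return True
        return False  # still failing: keep the chunk for a later flush


delivered = []
waits = []
failures_left = [2]  # the hypothetical destination fails twice, then recovers


def flaky_send(records):
    if failures_left[0] > 0:
        failures_left[0] -= 1
        raise ConnectionError("broker unavailable")
    delivered.extend(records)


output = BufferedOutput(flaky_send, sleep=waits.append)
output.emit({"message": "login failed"})
output.emit({"message": "port scan detected"})  # reaches chunk_limit and flushes
```

The key point is that records leave the buffer only after a successful send, so a temporary outage of the destination delays delivery instead of dropping data.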

You can learn the details of Kafka’s security features in Introduction to Apache Kafka Security.

We will describe how to get started and configure Fluentd with Kafka in the next blog post.

Looking for more use cases to work on? Try our Fluent Bit use case, which uses the ‘tail’ plugin and parses multiline log data.

Commercial Service - We are here for you.

In the Fluentd Subscription Network, we provide consultancy and professional services to help you run Fluentd and Fluent Bit with confidence and solve your pain points. A service desk is also available for your operations, and the team is equipped with the Diagtool and practical knowledge of running Fluentd in production. Contact us anytime if you would like to learn more about our service offerings.